Epistemologia della macchina 2016 (Machine Epistemology 2016)

Thursday, February 25, 2016

Wednesday, February 17, 2016

Homework #6 - Taxonomy of a Machine

Machine Learning Algorithms

| Paradigm | Learning rule | Architecture | Learning algorithm | Task |
|---|---|---|---|---|
| Supervised | Error-correction | Single- or multi-layer perceptron | Perceptron rule, Stochastic gradient descent, Backpropagation, BP+reinf, SBPI | Pattern Classification, Function Approximation, Prediction, Control |
| | | Convolutional Networks | Stochastic gradient descent, Backpropagation | Classification, Computer Vision, Speech Recognition |
| | | Auto-encoders | Gradient Descent | Data Compression, Preprocessing |
| | Competitive | Competitive | Learning Vector Quantization | Within-Class Categorization, Data Compression |
| Unsupervised | Hebbian | Recurrent | Hebb rule | Denoising, Attractor Learning |
| | | Hopfield Network | Hebb rule, Inference (BP, TAP, Monte Carlo) | Memory, Denoising, Attractor Learning |
| | Boltzmann | Boltzmann Machine | Contrastive divergence, Statistical Inference | Feature Learning, Preprocessing, Denoising, Generative Model |
| | Kohonen's SOM | Kohonen's SOM | Kohonen's SOM | Data Analysis |
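The Hebbian rows of the table (recurrent/Hopfield network, Hebb rule, denoising, attractor learning) can be illustrated with a minimal sketch of a classic Hopfield network. It assumes ±1 neurons, the Hebb prescription W_ij = (1/N) Σ_μ ξ_i^μ ξ_j^μ with W_ii = 0, and deterministic asynchronous updates; it does not cover the inference-based algorithms listed in the table, and the function names are hypothetical.

```python
from typing import List

Pattern = List[int]  # each entry is +1 or -1


def hebb_weights(patterns: List[Pattern]) -> List[List[float]]:
    """Hebb rule: W_ij = (1/N) * sum over stored patterns of xi_i * xi_j, zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w: List[List[float]], state: Pattern, max_sweeps: int = 10) -> Pattern:
    """Asynchronous updates s_i <- sign(sum_j W_ij s_j) until nothing changes."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(w[i][j] * s[j] for j in range(n))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:  # reached a fixed point (an attractor)
            break
    return s


if __name__ == "__main__":
    p1 = [1, 1, 1, 1, -1, -1, -1, -1]
    p2 = [1, -1, 1, -1, 1, -1, 1, -1]
    w = hebb_weights([p1, p2])
    noisy = [-1] + p1[1:]  # p1 with its first bit flipped
    print(recall(w, noisy))  # → [1, 1, 1, 1, -1, -1, -1, -1]
```

The two stored patterns are chosen orthogonal so that both are exact attractors; with many correlated patterns the network starts to produce spurious memories.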

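Supervised vs. Unsupervised

The difference lies in the data: in supervised learning each training example is labeled with the desired output, while in unsupervised learning the data are unlabeled and the machine has to find structure in the inputs alone.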
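To make the labeled/unlabeled distinction concrete, here is a minimal sketch on a toy 1-D dataset, using two techniques swapped in for illustration (they are not rows of the table): a nearest-centroid classifier uses the labels to place one prototype per class, while 2-means clustering must discover the two groups from the inputs alone. All names and data are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple


def nearest_centroid_fit(xs: List[float], ys: List[int]) -> Dict[int, float]:
    """Supervised: the labels say which points belong together; one centroid per class."""
    return {label: mean([x for x, y in zip(xs, ys) if y == label]) for label in set(ys)}


def predict(centroids: Dict[int, float], x: float) -> int:
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def two_means(xs: List[float], iters: int = 10) -> Tuple[float, float]:
    """Unsupervised: 1-D k-means with k = 2; no labels are used."""
    c0, c1 = min(xs), max(xs)  # deterministic initialization at the extremes
    for _ in range(iters):
        g0 = [x for x in xs if abs(x - c0) <= abs(x - c1)]
        g1 = [x for x in xs if abs(x - c0) > abs(x - c1)]
        if g0:
            c0 = mean(g0)
        if g1:
            c1 = mean(g1)
    return c0, c1


if __name__ == "__main__":
    xs = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
    ys = [0, 0, 0, 1, 1, 1]
    print(predict(nearest_centroid_fit(xs, ys), 4.0))  # → 1
    print(two_means(xs))  # → (1.0, 5.0)
```

Both methods end up with prototypes at 1.0 and 5.0, but only the supervised model knows that the group around 5.0 is "class 1"; the cluster indices returned by k-means are arbitrary names.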

Tuesday, February 16, 2016

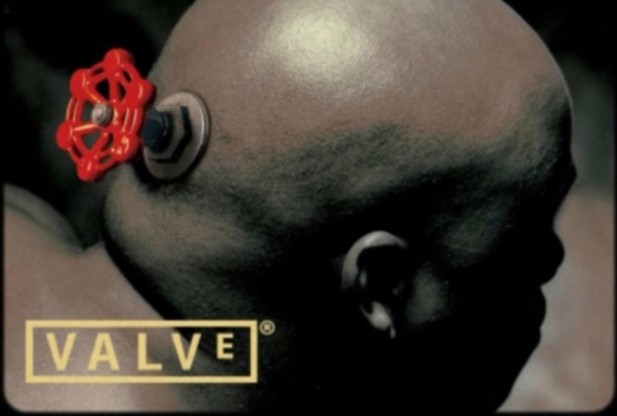

Homework #5 - Logos&Machines

Valve Software is an American video game developer and digital distribution company, founded in 1996 and headquartered in Bellevue, Washington (US).

Bad Robot Productions (known simply as Bad Robot when it was founded in 2001) is an American film and television production company owned by J. J. Abrams.

Homework #4 - Words&Machines

Perceptron -- a perceiving and recognizing self-operating machine (F. Rosenblatt, 1957).
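Rosenblatt's learning rule can be sketched in a few lines. This minimal version assumes 0/1 targets, a hard threshold and the update w ← w + η (y - ŷ) x applied only after a mistake; the AND function is used because it is linearly separable, so the perceptron convergence theorem guarantees that training stops after finitely many mistakes. Function names and the learning rate are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (input vector, target in {0, 1})


def predict(w: List[float], b: float, x: List[float]) -> int:
    """Threshold unit: fires (1) if the weighted sum plus bias exceeds zero."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


def train_perceptron(data: List[Sample], lr: float = 1.0, max_epochs: int = 100):
    """Perceptron rule: w <- w + lr * (y - y_hat) * x, applied only on mistakes."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in data:
            error = y - predict(w, b, x)  # -1, 0 or +1
            if error != 0:
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                mistakes += 1
        if mistakes == 0:  # every training pattern is classified correctly
            break
    return w, b


AND: List[Sample] = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND)
    print([predict(w, b, x) for x, _ in AND])  # → [0, 0, 0, 1]
```

Replacing AND with XOR makes the loop run until `max_epochs` without ever reaching zero mistakes, which is the classic limitation of a single-layer perceptron.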

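Adaline -- Adaptive Linear Neuron (later Adaptive Linear Element), an early single-layer artificial neural network whose adjustable weights were implemented in hardware with memistors (electrochemical variable resistors, not to be confused with the memristor). Developed by Professor Bernard Widrow and his student Ted Hoff in 1960, it is based on the McCulloch–Pitts neuron. It consists of a set of weights, a bias and a summation function.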
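In Widrow's hardware the adjustable weights were physical devices; in software they are just numbers. Below is a minimal sketch of the Widrow-Hoff (LMS, or delta) rule, assuming bipolar ±1 inputs and targets. The key difference from the perceptron rule is that the error is measured on the linear weighted sum, before the threshold, so the weights drift towards the least-squares solution. Names and hyperparameters are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (input vector, bipolar target -1 or +1)


def linear_output(w: List[float], b: float, x: List[float]) -> float:
    """The summation function: weighted sum of the inputs plus bias."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_adaline(data: List[Sample], lr: float = 0.05, epochs: int = 100):
    """LMS rule: w <- w + lr * (d - w.x - b) * x, error taken before any threshold."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, d in data:
            err = d - linear_output(w, b, x)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


def classify(w: List[float], b: float, x: List[float]) -> int:
    """Quantizer applied only at prediction time."""
    return 1 if linear_output(w, b, x) >= 0 else -1


AND_BIPOLAR: List[Sample] = [([-1, -1], -1), ([-1, 1], -1), ([1, -1], -1), ([1, 1], 1)]

if __name__ == "__main__":
    w, b = train_adaline(AND_BIPOLAR)
    print(w, b)
    print([classify(w, b, x) for x, _ in AND_BIPOLAR])  # → [-1, -1, -1, 1]
```

For this bipolar AND data the least-squares weights are w = (0.5, 0.5) and b = -0.5; with a constant learning rate the online rule keeps oscillating slightly around that point rather than settling exactly on it.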

Memristor -- portmanteau of memory resistor, is a non-linear passive two-terminal electrical component relating electric charge and magnetic flux linkage. The memristor's present electrical resistance depends on how much electric charge has flowed in what direction through it in the past. The device remembers its history—the so-called non-volatility property. When the electric power supply is turned off, the memristor remembers its most recent resistance until it is turned on again.
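The history dependence described above can be sketched with a deliberately simplified, hypothetical model: an internal state x in [0, 1], moved by the net charge that has flowed through the device and clamped at its limits, sets the resistance between R_on and R_off. This is a toy illustration, not a physical device model; the class name and all parameter values are made up.

```python
from dataclasses import dataclass


@dataclass
class ToyMemristor:
    r_on: float = 100.0     # hypothetical low-resistance limit (ohms)
    r_off: float = 16000.0  # hypothetical high-resistance limit (ohms)
    q_full: float = 1e-4    # hypothetical charge (coulombs) that sweeps x from 0 to 1
    x: float = 0.1          # internal state in [0, 1]; 1 means fully "on"

    @property
    def resistance(self) -> float:
        # Resistance interpolates linearly between the two limits.
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply_current(self, current: float, dt: float) -> None:
        # The sign of the current (its direction) decides whether x grows or shrinks.
        dq = current * dt
        self.x = min(1.0, max(0.0, self.x + dq / self.q_full))


if __name__ == "__main__":
    m = ToyMemristor()
    print(m.resistance)           # initial resistance
    m.apply_current(1e-3, 0.05)   # positive charge lowers the resistance
    print(m.resistance)
    # "Power off": no current flows, so x (and the resistance) is simply retained.
    m.apply_current(-1e-3, 0.05)  # reversing the direction undoes the change
    print(m.resistance)
```

Non-volatility shows up as the absence of any decay term: between calls to `apply_current` the state never changes.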

Homework #3 - The Machine of my Doctorate

Artificial neural networks

Strongly inspired by the visual system, the Multi-layer Perceptron is a device capable of performing a classification task by learning from a set of input-output associations, often called the training set. Its many layers of interconnected artificial neurons process the raw input data sequentially and, given enough neurons, can represent arbitrarily complex non-linear functions of it. The parameters of the device, usually referred to as synaptic weights, capture complex structures (features) in the inputs; once training is complete, they can be used to classify correctly a pattern never seen during training. This mechanism likely resembles, at least in spirit, the learning process taking place in the brain.
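A minimal sketch of the mechanism described above, assuming a single hidden layer of sigmoid units, a squared-error loss and plain per-sample gradient descent with backpropagation. XOR is used as the training set because no single-layer perceptron can represent it. The class, function and parameter names (`TinyMLP`, `n_hidden`, `lr`) are hypothetical, and this is not the specific device studied in the doctorate.

```python
import math
import random
from typing import List, Tuple

Sample = Tuple[List[float], float]  # (input vector, target in [0, 1])


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """n_in -> n_hidden -> 1 network of sigmoid units."""

    def __init__(self, n_in: int = 2, n_hidden: int = 4, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Synaptic weights start small and random to break the symmetry between hidden units.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x: List[float]) -> Tuple[List[float], float]:
        # Sequential, layer-by-layer processing of the raw input.
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sigmoid(sum(w * hi for w, hi in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x: List[float], target: float, lr: float) -> None:
        h, y = self.forward(x)
        # Output error signal for E = 0.5 * (y - target) ** 2.
        delta_out = (y - target) * y * (1.0 - y)
        # Backpropagate to the hidden layer using the output weights before updating them.
        delta_hidden = [delta_out * w * hi * (1.0 - hi) for w, hi in zip(self.w2, h)]
        self.w2 = [w - lr * delta_out * hi for w, hi in zip(self.w2, h)]
        self.b2 -= lr * delta_out
        for j, dj in enumerate(delta_hidden):
            self.w1[j] = [w - lr * dj * xi for w, xi in zip(self.w1[j], x)]
            self.b1[j] -= lr * dj


def total_loss(net: TinyMLP, data: List[Sample]) -> float:
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data)


def train(net: TinyMLP, data: List[Sample], epochs: int = 5000, lr: float = 0.5) -> None:
    for _ in range(epochs):
        for x, t in data:  # one gradient step per training pattern
            net.train_step(x, t, lr)


XOR: List[Sample] = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
```

Usage: create `net = TinyMLP(seed=0)`, call `train(net, XOR)` and compare `total_loss(net, XOR)` before and after. With these settings the network usually separates the four XOR patterns; a different seed can occasionally leave it stuck on a plateau, a well-known weakness of plain gradient descent.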
